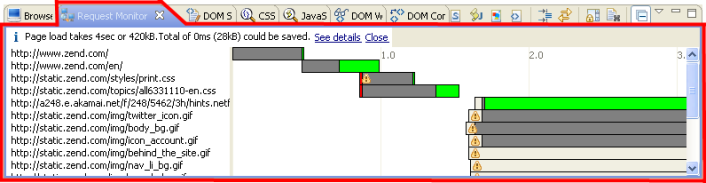

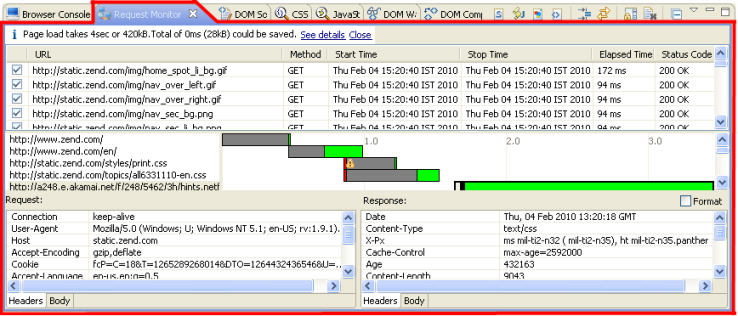

This procedure describes how to show the request/response Content panel.

This panel provides additional information to the Request Monitor view.

For more information see Response/Request Panel.

The request/response panel adds a window to the top and to the bottom of the view. The top window shows the same information as the original view, but in columns.

The bottom window separates the request and response into two tables. If you select a URL in the middle window, you can see the details of the request and response separately. The details are separated into Headers and Body.

| Rule | Description |
| --- | --- |
| Avoid Redirects | Detects a response with a 301 or 302 status. If the browser has to follow redirects before loading the main page, it cannot do anything else in the meantime. The extreme case is a "redirect chain", where one URL redirects to another redirect. The following example illustrates a redirect chain and its extra cost: google.com -> www.google.com -> www.google.pl (2 requests, 0.4 s, and 2 KB of total transfer in/out). |
| Combine External CSS, Images, or JavaScript | Detects more than one download of a CSS, image, or JavaScript file. Many small resources of the same type may take longer to load than a single larger resource. Browsers try to minimize the load time by parallelizing downloads as much as possible. Parallel downloads save time, but they do not save bandwidth. Assuming that a typical small resource is 1 KB and that the headers of a typical GET request/response are also 1 KB, downloading 10 small resources costs `10*(resourceSize+headersSize) = 10*(1+1) = 20` KB of bandwidth. If all the resources were combined into a single file, the download would need only one set of headers and would cost `10*1 + 1 = 11` KB instead, saving 45% of the bandwidth. Merging multiple images into a single sprite can produce even smaller files because of how images are represented internally. For example, if all images use similar colors, they can share a single palette instead of many separate palettes. |
| CSS Expression or Filter Use | Checks whether "expression(...)" or "filter: alpha(...)" is used. Both slow down rendering: an expression has to be re-evaluated constantly (on scroll, resize, and load), and the alpha filter is slow, according to YSlow. |
| Inefficient CSS Selector | Checks whether any selector in use contains the universal selector (`*`). Universal selectors take more time to apply because they have to be matched against all DOM elements in the document. Based on https://developer.mozilla.org/en/Writing_Efficient_CSS |
| Unused CSS Rule | Walks the DOM document to find the CSS rules referenced by DOM nodes (via the Mozilla API), then parses all loaded CSS files to find all loaded rules, and lists the rules that were loaded but never used. On a large website it is easy to lose control of the CSS and keep adding styles without removing old ones for fear of breaking something. This rule helps keep the set of CSS rules minimal. |
| Unused CSS File | Checks whether any of the rules defined in the CSS file are referenced in the HTML document. |
| Gzip Contents | Checks whether the response has a Content-Encoding: gzip header. Gzip compression saves bandwidth. |
| Leverage Browser Caching | Checks whether the response contains an "Expires" or "Cache-Control" header. Caching significantly reduces the number of downloads needed. |
| Minimize Cookie Size | Checks the length of a request's "Cookie" header. The average request should be no larger than 1500 bytes so that it fits into one packet. A large cookie can easily exceed that limit, causing the request to take more packets. Google suggests using cookies no longer than 1000 bytes and recommends keeping them to 400 bytes or less. See http://code.google.com/intl/pl-PL/speed/page-speed/docs/request.html#MinimizeCookieSize |
| Minimize the Number of IFrames | Yahoo recommends using no more than 5 IFrames per web page. There is no clear evidence of how additional IFrames contribute to performance loss. |
| No 404s | Detects responses with a 404 status. When a web page is opened, it is not immediately visible whether parts of it are missing because of a 404 response from the server. |
| Optimize CSS and JavaScript Order | Parses the DOM document to find LINK tags referring to CSS that appear after SCRIPT tags referring to external JavaScript files. This is no longer a problem for modern browsers, because they can download CSS and JavaScript resources at the same time. Still, while JavaScript is executing, all other actions are blocked, because JavaScript execution usually occurs in the main browser thread. The following Google Page Speed diagram shows a hypothetical situation: http://code.google.com/intl/pl/speed/page-speed/docs/rtt.html#PutStylesBeforeScripts The resources are in the following order: CSS file, JavaScript file, JavaScript file, CSS file. Requests are handled by a servlet that by default delays each response by about 1 s to simulate network load. |
| Parallelize Downloads Across Domains | Checks whether requests are split more or less equally across all domains (using a user-defined threshold factor). Reports a problem for every domain that responds to significantly more requests than the others. Using more domains helps browsers parallelize downloads more effectively, because browsers usually have hard limits on the number of parallel downloads per hostname. HTTP 1.1 recommends up to 2 parallel connections; popular browsers use up to 6. |
| Reduce DNS Lookups | Checks whether the number of unique hostnames is less than or equal to 5. Using too many hostnames can make the time spent resolving hostnames to IP addresses too long. Google recommends up to 5 domains. |
| Uncompacted Resource | Detects extra whitespace, comments, or other redundant information that could be removed to make the resource smaller and therefore faster to download. This rule analyzes JavaScript, CSS, and HTML files. |
| Use GET for Ajax Calls | Detects XMLHttpRequest requests that use a request method other than "GET". According to Yahoo, many browsers need 2 packets for POST requests, compared to 1 when using GET. See http://developer.yahoo.com/performance/rules.html. |
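The bandwidth estimate in the "Combine External CSS, Images, or JavaScript" rule can be reproduced with a few lines of Python. This is only a sketch of the arithmetic; the function name `transfer_cost_kb` and its parameters are illustrative and are not part of the product.

```python
def transfer_cost_kb(resource_count, resource_kb, headers_kb, combined):
    """Estimate the bandwidth (in KB) needed to download a set of resources."""
    payload = resource_count * resource_kb
    # Separate downloads each carry their own request/response headers;
    # a combined file carries only one set of headers.
    header_sets = 1 if combined else resource_count
    return payload + header_sets * headers_kb


# Figures from the rule description: 10 resources of 1 KB, 1 KB of headers each.
separate = transfer_cost_kb(10, 1, 1, combined=False)
merged = transfer_cost_kb(10, 1, 1, combined=True)
saving = (separate - merged) / separate
```

With the figures from the table, `separate` is 20 KB, `merged` is 11 KB, and `saving` is 0.45, which matches the 45% quoted in the rule.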
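The header-based rules (Gzip Contents, Leverage Browser Caching, and Minimize Cookie Size) all reduce to simple checks on request and response headers. The following sketch shows one possible way to express them; it is not the tool's implementation. The function `check_headers`, the constant `MAX_COOKIE_BYTES`, and the choice of 1000 bytes as the reporting limit are assumptions made for illustration.

```python
# Assumption: report cookies above the 1000-byte figure quoted in the table.
MAX_COOKIE_BYTES = 1000


def check_headers(request_headers, response_headers):
    """Return the names of the header-based rules that one exchange violates."""
    # HTTP header names are case-insensitive, so normalize them first.
    req = {name.lower(): value for name, value in request_headers.items()}
    resp = {name.lower(): value for name, value in response_headers.items()}

    findings = []
    if "gzip" not in resp.get("content-encoding", "").lower():
        findings.append("Gzip Contents")
    if "expires" not in resp and "cache-control" not in resp:
        findings.append("Leverage Browser Caching")
    if len(req.get("cookie", "").encode("utf-8")) > MAX_COOKIE_BYTES:
        findings.append("Minimize Cookie Size")
    return findings
```

For example, `check_headers({"Cookie": "a" * 1200}, {"Content-Type": "text/html"})` reports all three rules, while a gzip-encoded response with a `Cache-Control` header and no oversized cookie reports none.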
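The two hostname rules (Reduce DNS Lookups and Parallelize Downloads Across Domains) can be illustrated by counting requests per hostname. The sketch below is hypothetical: the table does not say how the user-defined threshold factor is applied, so this example assumes that a hostname is reported when it serves more than `threshold_factor` times the average number of requests per hostname. All function names are illustrative.

```python
from collections import Counter
from urllib.parse import urlsplit

MAX_HOSTNAMES = 5  # limit quoted in the "Reduce DNS Lookups" rule


def hostname_counts(urls):
    """Count how many requests went to each hostname."""
    return Counter(urlsplit(url).hostname for url in urls)


def too_many_hostnames(urls, limit=MAX_HOSTNAMES):
    """True when the page uses more unique hostnames than the limit."""
    return len(hostname_counts(urls)) > limit


def overloaded_hosts(urls, threshold_factor=1.5):
    """Hostnames serving far more requests than the average (assumed definition)."""
    counts = hostname_counts(urls)
    if not counts:
        return []
    mean = sum(counts.values()) / len(counts)
    return sorted(host for host, n in counts.items() if n > threshold_factor * mean)
```

With eight requests to one hostname and one request each to two others, the average is about 3.3 requests per hostname, so only the first hostname exceeds the assumed threshold of 1.5 times the average.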
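The check behind "Optimize CSS and JavaScript Order" can be approximated by scanning the markup for stylesheet LINK tags that appear after an external SCRIPT tag. The sketch below uses Python's standard `html.parser` module on static HTML instead of the live DOM that the rule inspects, so it is an approximation. The class and function names are illustrative.

```python
from html.parser import HTMLParser


class OrderChecker(HTMLParser):
    """Collect stylesheet links that appear after an external script."""

    def __init__(self):
        super().__init__()
        self.seen_external_script = False
        self.late_stylesheets = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            self.seen_external_script = True
        elif tag == "link":
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel and self.seen_external_script:
                self.late_stylesheets.append(attributes.get("href"))


def stylesheets_after_scripts(html):
    """Return the href of every stylesheet declared after an external script."""
    checker = OrderChecker()
    checker.feed(html)
    checker.close()
    return checker.late_stylesheets
```

For a page that loads its resources in the order described in the table (CSS file, JavaScript file, JavaScript file, CSS file), only the last CSS file is reported.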

©1999-2013 Zend Technologies LTD. All rights reserved.
